Navigating the Waters of Docker

Updated: September 26, 2024

Published: May 23, 2018

Far too often, software is stable on one machine and then fails the moment it is moved somewhere else. Sometimes that’s by design, as with copyrighted software, but in today’s cloud-based world, portable code is essential to a successful service. As software becomes more and more critical to business, a successful and dependable launch becomes vital. Reliability is key for customer service, yet even with teams of people working together, moving software from computer to computer remains a complicated problem to solve. Anyone who’s worked in software has seen a popular website go down, and the dirty truth is that sometimes the cause is an update rather than hardware, even if that update was tested thoroughly on several developer machines.

In 2013, Docker was released to help solve this problem. Docker brought the benefits of environment enforcement to the market in a way that was friendly and scalable. Each Docker container houses the important environment artifacts in a way that allows you to deploy, scale, and standardize your software solutions. It’s for that reason that Docker, and containerized solutions in general, belong in any developer’s toolbelt.

First, let’s dig into the concept a bit further. If you had software running on your computer, the surest way to deploy it would be to make a copy of your whole computer and make that the server. This would ensure that your application works, and it is completely doable via virtualization. That’s a huge copy of data though! You’re copying the operating system, tons of non-essential files, your internet history, photos, music folders, and so on. Ideally, you’d copy over only the parts that are essential for your app, so developers and servers would have smaller environments to manage. That’s where containers shine. In your container, you specify the software, environment variables, files, system libraries, and settings your app needs, but you leave out the kernel and the non-essential files; containers share the kernel of the machine they run on. A container’s contents are simply all the setup your application requires. Because no separate operating system has to boot for each one, multiple containers can be started quickly once a computer is up and running!

Containerizing your application not only protects the application itself, it also protects your operating system and other containers from crossover issues. The isolation of containers makes sure that software in one container will not accidentally interfere with software in another. These encapsulation boundaries let teams work independently of one another while ensuring that their project will play nicely with many other projects on the same machine at the same time. This mentality allows you to break a complicated piece of software into microservices, making your project more efficient and manageable. You can even use containers to run redundant copies of a service so your app can scale!

Containers were popularized by Docker but are not limited to it. Docker is an excellent choice, however, especially since it supports all major Linux distributions, Mac, Microsoft Windows, and even common cloud infrastructure such as AWS and Azure. The concept of containers has been around for a while, and multiple solutions have existed. In 2015, the Open Container Initiative was formed to create industry standards for containerization, so that anyone can write their own container system that fits the open, supported standards delineated by the initiative. What this means for developers is that containers existed before Docker and would survive even if Docker were to go away tomorrow: the Open Container Initiative is sponsored by AWS, Google, IBM, HP, Microsoft, VMware, Red Hat, Oracle, and Twitter. Containers are here to stay.

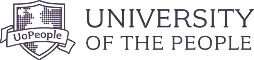

To get started, you first need to decide whether containers fit the project you want to use them in. When working with Docker containers, you’ll be passing bundles of software around, and in a few cases that’s simply not doable. If you’re doing consulting work where you can’t manage your client’s machines, or working on open source, perhaps you should consider a more lightweight solution like Solidarity, which will merely check your environment, not write to it. But if you’re able to enforce the use of containers, you start by installing Docker. As a developer, you’ll want to install the Community Edition (CE). As of the time of this writing, that’s about 353 MB on Mac.

Screenshots from a simple OS X Install:

Once you’ve installed Docker on your preferred operating system, open a terminal/command prompt and try out hello-world. This is very simple to do! In your terminal, type `docker run hello-world`. If everything is installed correctly, you should get a friendly message letting you know that you’ve pulled down a working version of “hello world” and that it ran correctly on your machine. That’s your first Docker container! You can see the image it was created from by typing `docker image ls`.

How did you get this Docker image? How do you build your own? It’s simple. A file called a “Dockerfile” describes how the image is built and what the container runs. We can use it to identify our files and declare how to correctly set up our app! As for sharing, we can download public images from hub.docker.com, and we can even create and publish our own.

Just by adding lines to this file, you can set environment variables, copy files, and even build on official runtimes; lines that start with a pound sign (#) are comments. There’s a friendly example on the Docker website, but for our example here, let’s pretend we have a simple Node.js website. If our website lived in a file named index.js, we could “Dockerize” the site with a Dockerfile that looks like so:

# use an official Node runtime as parent image, ensuring node version 7

FROM node:7

# set the working directory to /app

WORKDIR /app

# Copy all contents to app now

COPY . /app

# install needed node_modules

RUN npm install

# Document the port the site listens on (it is published with -p at run time)

EXPOSE 8081

# Tell it how to run the site

CMD ["node", "index.js"]

You can then build the Docker image with `docker build -t hello-world .` and subsequently run it with `docker run -p 8081:8081 hello-world`. That’s it! We’ve manually coded our environment and running steps so we can utilize Docker. If you want to share your image using Docker Hub, you can push it there much like you would push code with git: after `docker login`, tag the image with your username using `docker tag hello-world <YOUR_DOCKER_HUB_USERNAME>/hello-world`, then upload it with `docker push <YOUR_DOCKER_HUB_USERNAME>/hello-world`. Friends and coworkers can then pull it down and run it, as simply as hello-world, with the command `docker run <YOUR_DOCKER_HUB_USERNAME>/hello-world`. Easy, right?

Now you can use containers quickly and easily in any project! In many ways, Docker makes your project easier to run, cleaner to compose, and easier to share. This is just the beginning of the power of Docker. Keep in mind we haven’t covered load balancing, deploying to clusters of machines, sharing resources between containers, and so much more! The best way to enjoy those exciting features is to start interacting with Docker in your projects today.


Sources:

Feature listing: https://www.docker.com/what-container

Open Container Initiative: https://www.opencontainers.org/

Docker wiki page on Container Technology Wiki: https://www.aquasec.com/wiki/display/containers/Docker+Containers